Acoustic Source Localization via Multi-Sensor Arrays

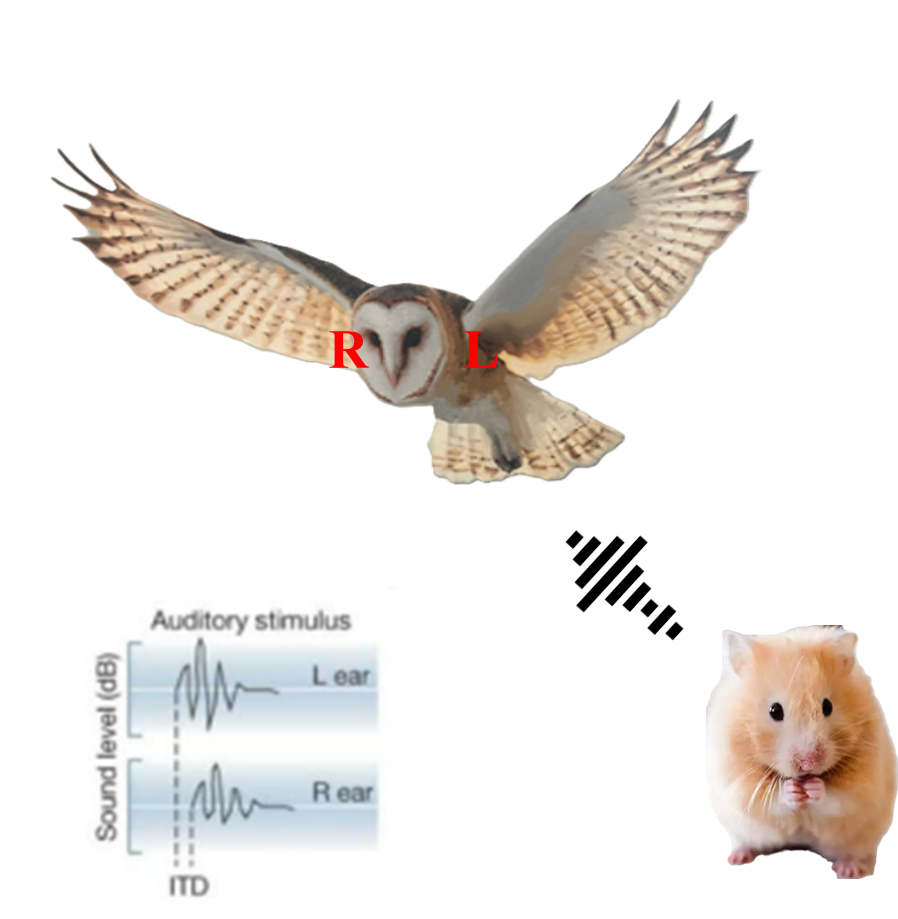

The ability to locate where a sound is coming from is crucial not only for humans and animals, but also for intelligent machines and robots. Humans naturally use the difference in time and loudness of sounds reaching each ear to figure out the direction of a sound. Animals like owls have developed even more specialized auditory systems, using unique ear shapes and brain circuits to pinpoint prey with incredible accuracy, even in the dark. Inspired by these amazing biological systems, our research aims to develop a robust three-dimensional sound localization system using a multi-sensor microphone array.

Biological Inspiration

Humans rely on two main cues to localize sound: Interaural Time Difference (ITD) and Interaural Level Difference (ILD). By detecting tiny differences in when a sound arrives at each ear, and how loud it is on each side, we can sense whether a sound is to our left, right, or somewhere in between. Animals like barn owls take this even further: their ears are asymmetrically placed, and their brains have specialized neurons that can detect microsecond differences, letting them hunt in complete darkness. These principles have provided a blueprint for building artificial systems that can hear the direction of sounds.

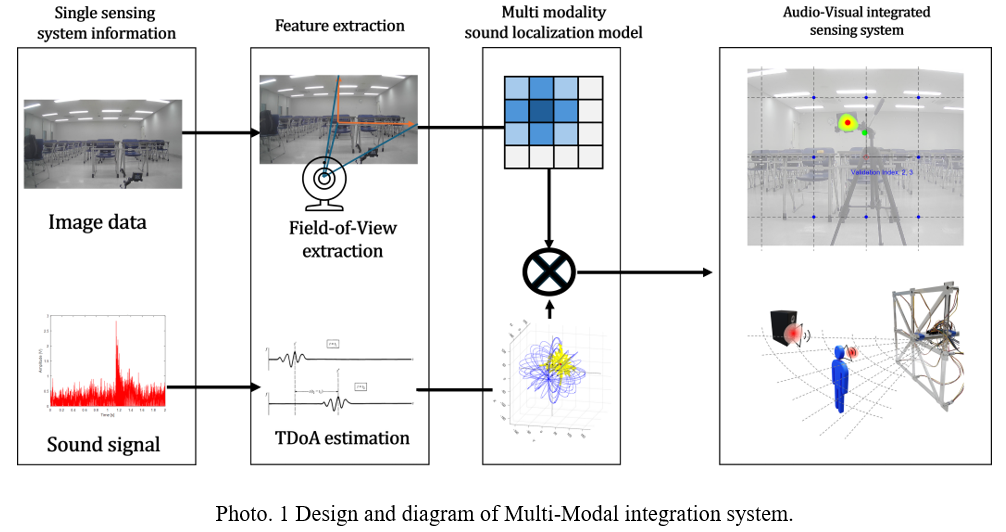

How Our System Works

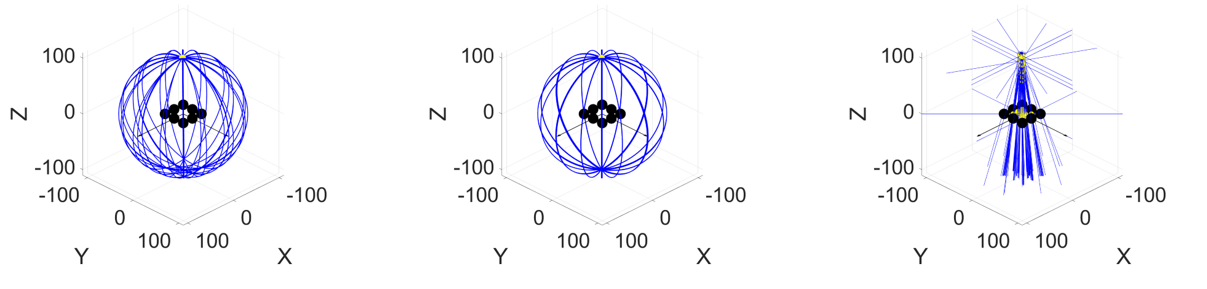

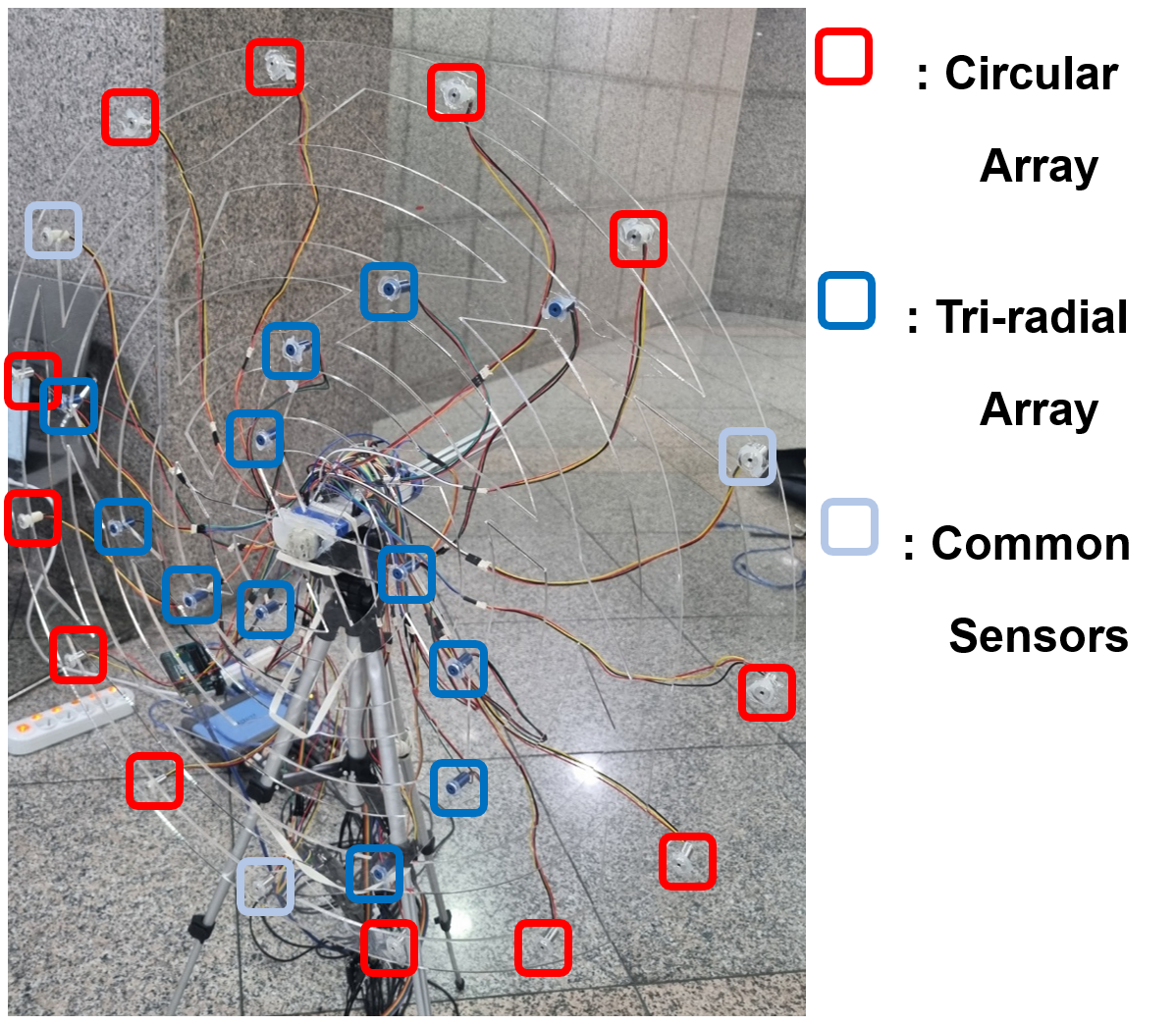

Inspired by these natural mechanisms, we built a system using an array of eight microphones, each working like an artificial ear. When a sound occurs, it reaches each microphone at a slightly different time. By precisely measuring these Time Differences of Arrival (TDoA) with signal processing techniques such as cross-correlation and GCC-PHAT, we can estimate where the sound came from in 3D space.
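To make the TDoA measurement concrete, here is a minimal sketch of GCC-PHAT for one microphone pair, written with NumPy. It is an illustration under stated assumptions, not our production code: the function name `gcc_phat`, the synthetic white-noise test signal, and the 25-sample delay are all hypothetical. Only the 100 kHz sampling rate is taken from the system described on this page.

```python
import numpy as np

def gcc_phat(sig, ref, fs, max_tau=None):
    """Estimate how much `sig` lags `ref`, in seconds, using GCC-PHAT."""
    n = len(sig) + len(ref)                  # zero-pad to avoid circular wrap-around
    SIG = np.fft.rfft(sig, n=n)
    REF = np.fft.rfft(ref, n=n)
    cross = SIG * np.conj(REF)               # cross-power spectrum
    cross /= np.abs(cross) + 1e-15           # PHAT weighting: keep phase, drop magnitude
    cc = np.fft.irfft(cross, n=n)            # generalized cross-correlation
    max_shift = n // 2
    if max_tau is not None:                  # optionally limit the search window
        max_shift = max(1, min(int(fs * max_tau), max_shift))
    # Reorder so that index 0 corresponds to lag -max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    shift = int(np.argmax(np.abs(cc))) - max_shift
    return shift / float(fs)

# Hypothetical test signal: white noise delayed by 25 samples at 100 kHz.
fs = 100_000
rng = np.random.default_rng(0)
ref = rng.standard_normal(4096)
delay_samples = 25
sig = np.concatenate((np.zeros(delay_samples), ref[:-delay_samples]))

tau = gcc_phat(sig, ref, fs, max_tau=0.005)
print(f"estimated delay: {tau * 1e6:.1f} microseconds")
```

The PHAT weighting discards spectral magnitude and keeps only phase, which tends to sharpen the correlation peak when the room adds reverberation. In practice `max_tau` would be set from the largest microphone spacing divided by the speed of sound.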

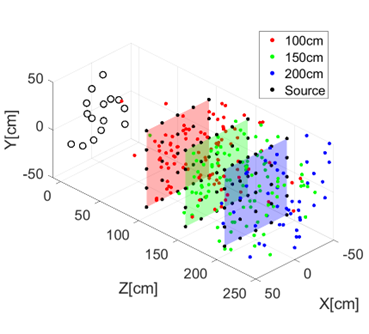

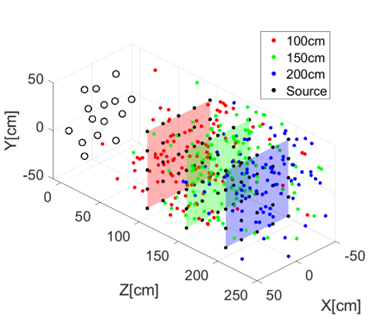

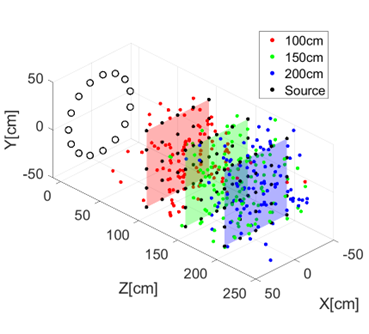

To turn these timing differences into an actual position, we use mathematical models (such as line-based, ring-based, and hyperboloid-based methods) that calculate possible locations for the sound source. By finding where the results from all microphones agree, our system can accurately determine both the direction and distance to the sound.
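As a rough illustration of how timing differences become a position, the sketch below solves the hyperboloid equations with a standard linearized least-squares step. This is a generic textbook technique swapped in for illustration, not necessarily the line-based, ring-based, or hyperboloid-based solvers used in our system. Each delay relative to microphone 0 gives a range difference `d_i - d_0 = c * tau_i`; squaring and subtracting the reference equation yields a system that is linear in the source position and the reference range `d_0`. The cube-shaped array geometry, the source position, the function name `locate_from_tdoa`, and the speed of sound value are all assumptions.

```python
import itertools
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature

def locate_from_tdoa(mics, tdoas, c=SPEED_OF_SOUND):
    """Linearized least-squares source position from TDoAs relative to mics[0].

    mics  : (M, 3) microphone coordinates in metres
    tdoas : (M-1,) arrival-time differences t_i - t_0 in seconds, i = 1..M-1
    Returns (position, range_to_mic0).
    """
    mics = np.asarray(mics, dtype=float)
    delta = c * np.asarray(tdoas, dtype=float)      # range differences d_i - d_0
    m0 = mics[0]
    # From |x - m_i|^2 - |x - m_0|^2 = 2*d_0*delta_i + delta_i^2 :
    #   2*(m_i - m_0).x + 2*delta_i*d_0 = |m_i|^2 - |m_0|^2 - delta_i^2
    A = np.hstack((2.0 * (mics[1:] - m0), 2.0 * delta[:, None]))
    b = np.sum(mics[1:] ** 2, axis=1) - np.sum(m0 ** 2) - delta ** 2
    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution[:3], solution[3]

# Hypothetical geometry: eight microphones on the corners of a 0.5 m cube.
mics = np.array(list(itertools.product((0.0, 0.5), repeat=3)))
source = np.array([1.0, 0.6, 0.4])                  # hypothetical true position

# Simulate noise-free delays relative to microphone 0.
dists = np.linalg.norm(mics - source, axis=1)
tdoas = (dists[1:] - dists[0]) / SPEED_OF_SOUND

estimate, range0 = locate_from_tdoa(mics, tdoas)
print("estimated position:", np.round(estimate, 4))
```

With measured (noisy) delays, this linear solve is usually treated as an initial guess and refined with a nonlinear least-squares fit, because the linearization treats `d_0` as an independent unknown.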

Real-World Testing

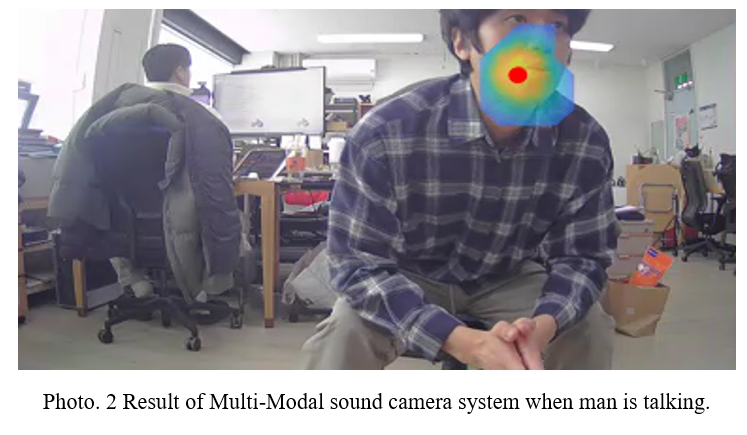

We tested our system in various real-world indoor environments, using different sounds (like voices, claps, and beeps). The results showed that our approach remains robust and accurate even with background noise or echo. Our technology is now ready to be applied in intelligent robots, surveillance systems, and even real-time sound cameras that overlay audio direction on video.

The framework operates at a sampling rate of 100 kHz, enabling analysis at a rate of 5 to 10 frames per second (fps), thereby achieving real-time tracking of sound sources in conjunction with visual imagery. By integrating acoustic data with camera calibration, the developed system visualizes acoustic source locations through intensity-based contour mapping directly correlated to sound levels. This innovative Sound Camera system accurately projects acoustic localization onto the camera's field of view, identifying high-probability sound source locations and enabling intuitive, multimodal representations.
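The overlay step can be pictured with a standard pinhole camera model. The sketch below is only an illustration: it assumes the source position has already been transformed into the camera frame (X right, Y down, Z forward), and the intrinsic parameters `fx`, `fy`, `cx`, `cy`, as well as the function name `project_to_pixel`, are hypothetical placeholders for values that a camera calibration would supply.

```python
import numpy as np

def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame onto the image plane (pinhole model)."""
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point is behind the camera and cannot be projected")
    u = fx * X / Z + cx      # horizontal pixel coordinate
    v = fy * Y / Z + cy      # vertical pixel coordinate
    return np.array([u, v])

# Hypothetical intrinsics for a 640x480 image.
fx, fy, cx, cy = 800.0, 800.0, 320.0, 240.0

# A localized source 2 m in front of the camera and 0.5 m to the right.
pixel = project_to_pixel((0.5, 0.0, 2.0), fx, fy, cx, cy)
print("overlay marker at pixel:", pixel)
```

An intensity contour could then be drawn around the returned pixel, scaled by the measured sound level.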

Experimental validation conducted in diverse environmental conditions confirms that the proposed system keeps angular localization error within 5 degrees, with average errors typically below that bound and minimal variance. This integration of high-resolution audio processing and visual mapping establishes a robust real-time sound localization solution, offering significant potential for enhancing intelligent vehicle systems and surveillance technologies.